Software 3.0

The evolution from human instructions to neural networks to agentic intelligence.

AI has the brain, but it has no hands.

Last updated Sep 18, 2025

The Evolution of Software

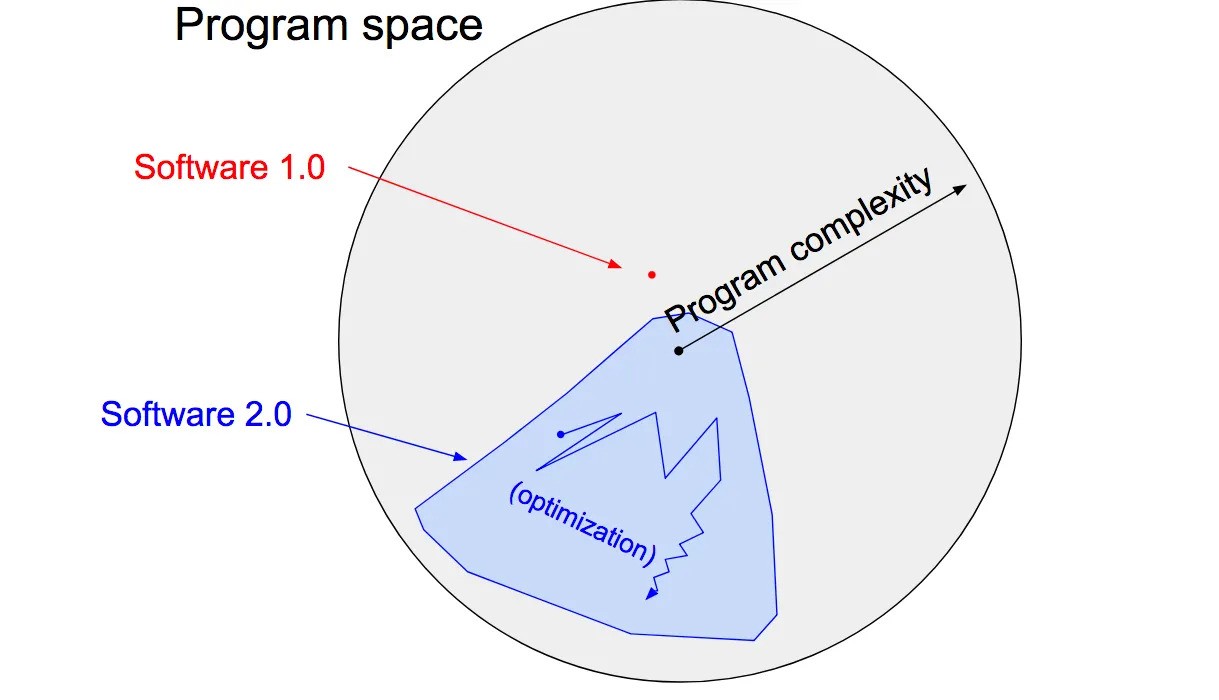

Software 1.0 → 2.0

From explicit instructions to neural networks (Karpathy, 2017)

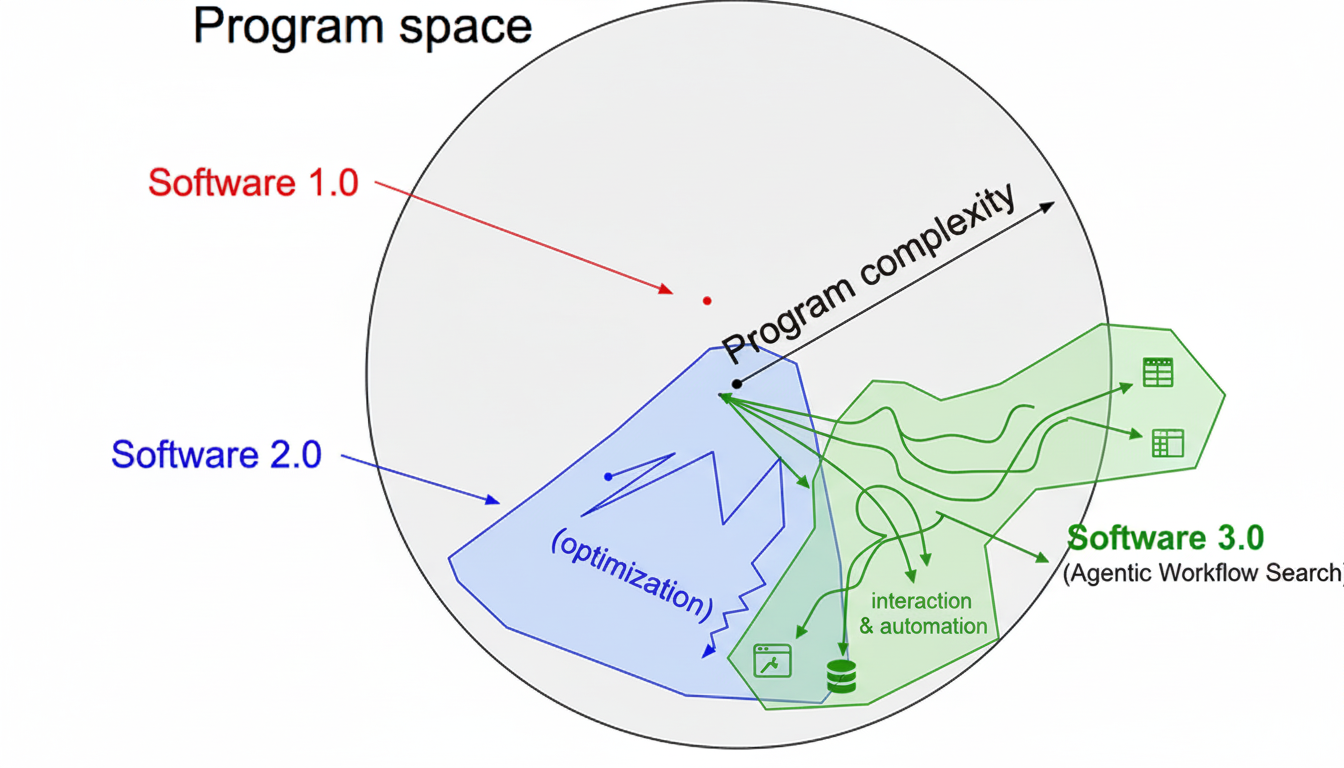

Software 3.0

Agentic workflow search: AI interacts with the world

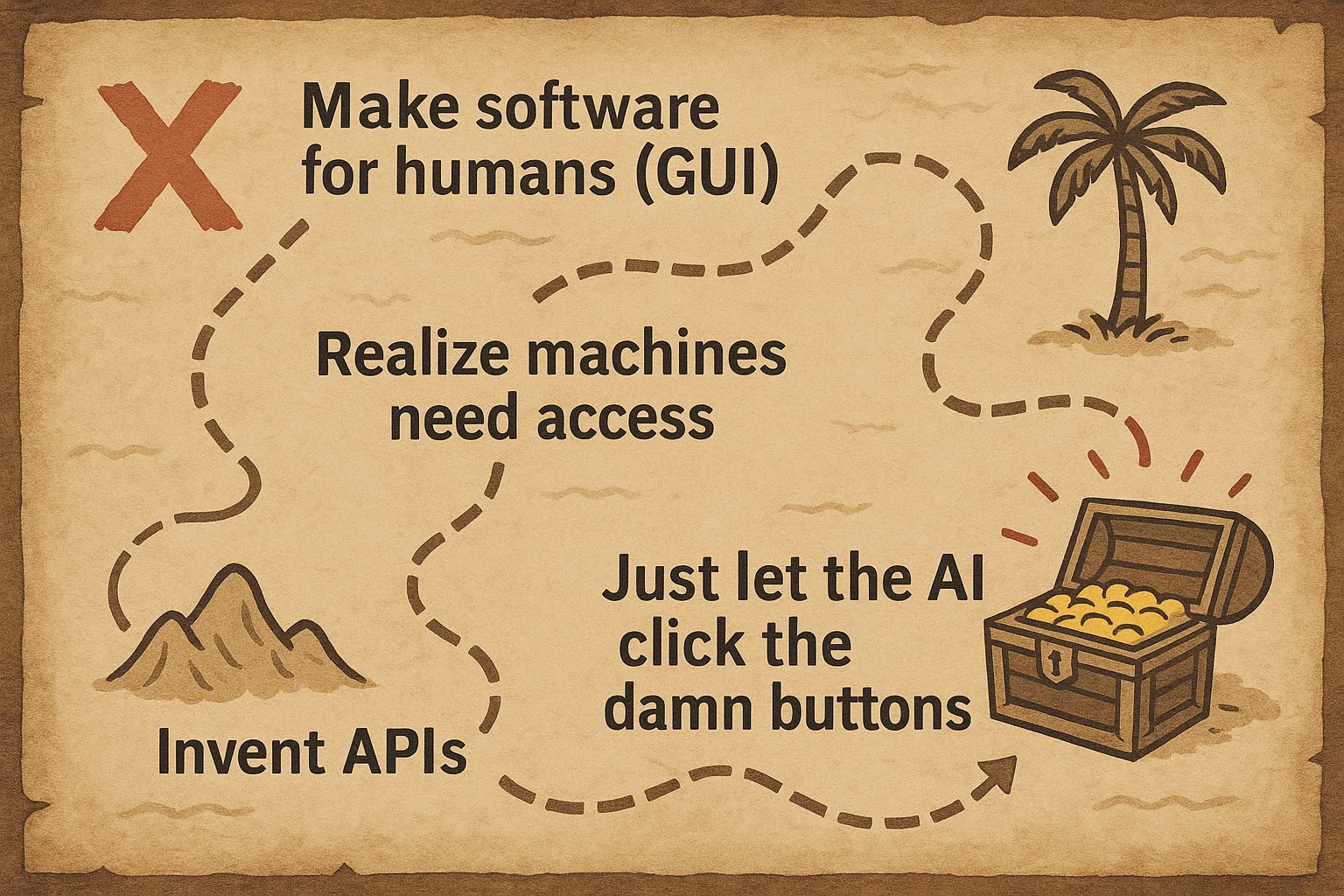

The Path Forward

Just let the AI click the damn buttons

The interface hasn't changed much

We’re still using:

- Mouse (1964)

- Keyboard (1868)

- Windows (1985)

Meanwhile

- AI can write novels

- Robots can do backflips

- Cars can drive themselves

But we’re still here: Click. Drag. Type. Repeat.

The average knowledge worker

- ~76 tabs opened daily

- ~1,100 app switches

- ~5,200 mouse clicks

- ~22,000 characters typed

We're flying a space shuttle with horse‑and‑buggy controls.

This isn't a UI problem. It's a paradigm problem.

The screen is the universal interface. All software flows through this surface.

We learned with our hands. We shaped stone into edges. The edge shaped us back. Tool and mind took turns.

A flat rock became a table. Then paper. Then glass. Same move each time: make a surface that can hold a thought outside the head. Once outside, we can point, compare, erase, try again.

The screen is today’s flat stone. Not magic. A plain place to lay out steps. If a person can do it there, a patient machine can too. Not perfect. But honest enough when it shows its work.

People ask for big leaps. Nature prefers short ones. Scratch a mark. Look. Scratch again. Progress is the habit of small tests in public.

Here is the simple pattern: pick a surface; mark it; try; check; keep what works. The marks changed: cuts, ink, pixels. The pattern did not.

Most value sits behind slow tables and stubborn forms. We wait for clean APIs and perfect plans. We forget that people already use these tools by hand. The humble path is to watch the hands and copy their care.

Keep it gentle. Short runs. Small steps. Bounded retries. When something fails, leave a receipt. The point is not to impress. The point is to carry the work forward with fewer surprises.
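One way to picture "bounded retries" and "leave a receipt" is the minimal sketch below. It is an illustration only, not an implementation from this page: the names `Receipt`, `Runner`, and `run_step` are hypothetical, and each step is assumed to be a plain function that returns `True` when its own check passes.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Receipt:
    """Record of one attempt: which step, which try, and what happened."""
    step: str
    attempt: int
    ok: bool
    detail: str


@dataclass
class Runner:
    """Hypothetical runner: small steps, bounded retries, a receipt per attempt."""
    max_retries: int = 3  # hard upper bound, so a step can never loop forever
    receipts: List[Receipt] = field(default_factory=list)

    def run_step(self, name: str, action: Callable[[], bool]) -> bool:
        """Try one step up to max_retries times; log every attempt, pass or fail."""
        for attempt in range(1, self.max_retries + 1):
            try:
                ok = action()
                detail = "ok" if ok else "check failed"
            except Exception as exc:  # a failure still leaves a receipt
                ok, detail = False, f"error: {exc}"
            self.receipts.append(Receipt(name, attempt, ok, detail))
            if ok:
                return True
        return False


if __name__ == "__main__":
    # Simulated step that fails once, then succeeds.
    outcomes = iter([False, True])
    runner = Runner(max_retries=3)
    runner.run_step("click submit", lambda: next(outcomes))
    for receipt in runner.receipts:
        print(receipt)
```

The receipts list is the point of the design: when a run stops, there is a plain record of what was tried and how it ended.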

Software started as explicit instructions. If-then-else. Functions calling functions. Humans told computers exactly what to do, step by step. Call this Software 1.0.

Then neural networks. Instead of writing code, you show examples. The network finds the pattern. Karpathy called this Software 2.0. The code writes itself. The weights are the program.

But there's a problem. AI can write novels and solve equations, but it can't click a button. It understands everything but can touch nothing. It has a brain but no hands.

Software 3.0 is the obvious next step. Give AI hands. Let it interact with the world through the same surface we do: the screen. Every pixel becomes accessible. Every interface becomes an API.

The screen is skeuomorphic: a digital imitation of physical surfaces. Buttons that look pushable. Folders that look openable. Trash cans that look fillable. We built these visual metaphors for humans. Now AI needs to understand them too.

Think about it: Every piece of software already has an interface. Twenty years of legacy systems. Millions of applications. All accessible through the same protocol: look, click, type. Why rebuild when you can just teach AI to use what's already there?

The surface is the API. The screen is the protocol.
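To make "look, click, type" concrete, here is a small sketch of that protocol as a Python interface. Everything in it is an assumption made for illustration: `Surface`, `FakeLoginScreen`, and `log_in` are hypothetical names, and the fake screen is an in-memory stand-in so the example runs without a real display or any automation library.

```python
from typing import Dict, Optional, Protocol


class Surface(Protocol):
    """Hypothetical three-verb protocol: look, click, type."""

    def look(self) -> str: ...
    def click(self, target: str) -> None: ...
    def type_text(self, text: str) -> None: ...


class FakeLoginScreen:
    """In-memory stand-in for a real screen showing a login form."""

    def __init__(self) -> None:
        self.focused: Optional[str] = None
        self.fields: Dict[str, str] = {"username": "", "password": ""}
        self.submitted = False

    def look(self) -> str:
        # Describe what is currently visible on the surface.
        if self.submitted:
            return "Welcome, " + self.fields["username"]
        return "Login form: [username] [password] [submit]"

    def click(self, target: str) -> None:
        if target == "submit":
            # The form only goes through when every field is filled in.
            self.submitted = all(self.fields.values())
        elif target in self.fields:
            self.focused = target
        else:
            raise ValueError(f"nothing to click at {target!r}")

    def type_text(self, text: str) -> None:
        if self.focused is None:
            raise RuntimeError("no field has focus")
        self.fields[self.focused] += text


def log_in(screen: Surface, user: str, password: str) -> str:
    """Drive the form the way a person would, then report what the screen shows."""
    screen.click("username")
    screen.type_text(user)
    screen.click("password")
    screen.type_text(password)
    screen.click("submit")
    return screen.look()


if __name__ == "__main__":
    print(log_in(FakeLoginScreen(), "ada", "secret"))
```

`log_in` depends only on the three verbs, so in this sketch any object that offers them, whether a fake form or a real screen driver, could be passed in unchanged.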